Model artifacts: the war stories

In my previous post, I started out by asking what you do with your model artifacts after you’ve trained a model, at the start of the journey to production. A day later, I asked the same question on Twitter:

❔Folks who are training models at work: how do you manage your model artifacts?

— Neal Lathia (@neal_lathia) September 4, 2020

Here are some snippets of what I’ve learned so far.

At an early stage, models are managed manually

Google Drive. Blobs in S3 and Cloud Storage. A raft of Docker containers. Spreadsheets to know which one is where. When someone said “this has always been a struggle,” I felt their pain.

There are definitely two modes here: (a) managing models during experimentation, to compare them to each other and pick the “winner” and — sometimes separately — (b) figuring out what to do with artifacts that need to be shipped to production.

➡️ I’d love to see some page view stats for the AWS boto3 and Python google.cloud docs — I’m sure we’ve all hung out there several times.

Many folks end up building a model store

There are some pretty awesome-sounding systems out there. Here’s an example from Luigi (who, by the way, runs this course on SageMaker).

We built a model storage solution with Postgres + s3 and an API on top of this. Our internal model training lib calls the API to store the model artifacts on s3 and add entries to the db that track metadata. Lets us leverage library-specific storage through a generic interface.

— Luigi Patruno (@MLinProduction) September 5, 2020
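To make that pattern concrete, here is a minimal sketch of the same idea: artifact bytes go to blob storage, and a database row tracks where they are plus some metadata. This is my own illustration, not Luigi's system. To keep it runnable on a laptop I've swapped in SQLite for Postgres and a local directory for S3, and the `ModelStore` class, its table schema, and the key layout are all made up for the example.

```python
import hashlib
import json
import sqlite3
import uuid
from pathlib import Path
from typing import Tuple


class ModelStore:
    """Toy model store: artifact bytes in a blob directory, metadata in a database.

    Assumptions for this sketch: SQLite stands in for Postgres and a local
    directory stands in for an S3 bucket.
    """

    def __init__(self, blob_root: str, db_path: str = ":memory:"):
        self.blob_root = Path(blob_root)
        self.blob_root.mkdir(parents=True, exist_ok=True)
        self.db = sqlite3.connect(db_path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS models ("
            "model_id TEXT PRIMARY KEY, name TEXT, blob_key TEXT, "
            "sha256 TEXT, metadata TEXT, "
            "created_at TEXT DEFAULT CURRENT_TIMESTAMP)"
        )

    def put(self, name: str, artifact: bytes, metadata: dict) -> str:
        """Write the artifact to blob storage, then record it in the database."""
        model_id = uuid.uuid4().hex
        blob_key = f"{name}/{model_id}/model.bin"
        path = self.blob_root / blob_key
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(artifact)
        self.db.execute(
            "INSERT INTO models (model_id, name, blob_key, sha256, metadata) "
            "VALUES (?, ?, ?, ?, ?)",
            (model_id, name, blob_key,
             hashlib.sha256(artifact).hexdigest(), json.dumps(metadata)),
        )
        self.db.commit()
        return model_id

    def get(self, model_id: str) -> Tuple[bytes, dict]:
        """Look up the blob key in the database and read the artifact back."""
        row = self.db.execute(
            "SELECT blob_key, metadata FROM models WHERE model_id = ?",
            (model_id,),
        ).fetchone()
        if row is None:
            raise KeyError(model_id)
        blob_key, metadata = row
        return (self.blob_root / blob_key).read_bytes(), json.loads(metadata)
```

Calling `store.put("churn", artifact_bytes, {"library": "sklearn"})` returns an id that `store.get(...)` can later resolve. The useful property is that the training code only ever talks to `put` and `get`, so the storage behind them can change without touching it.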

Similar examples mentioned Cassandra, BigQuery, Nexus OSS, Metaflow, creating pip-installable packages, and more. Some great stuff! But when Hamel said that he’s currently “stuck in the other 95% of Data Science” it reminded me that many teams out there are doing their jobs and building the enablers they need to do their jobs. Put another way, they’re laying the tracks ahead of the train.

➡️ It struck me that, in particular, every team that builds this type of system ends up reimplementing the code that serializes models into files.
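For a sense of what that repeated code tends to look like, here is a rough sketch: a small registry that maps a model's class to a function that knows how to write it to disk, with `pickle` as the fallback. Everything named here (`register_saver`, `save_model`, the `LinearModel` stand-in) is hypothetical. In a real system the registered functions would wrap each library's own save routine.

```python
import json
import pickle
from pathlib import Path
from typing import Any, Callable, Dict

# Maps a model class to a function that writes an instance to a given path.
_SAVERS: Dict[type, Callable[[Any, Path], None]] = {}


def register_saver(model_type: type):
    """Decorator that registers a library-specific saver for a model class."""
    def decorator(fn):
        _SAVERS[model_type] = fn
        return fn
    return decorator


def _pickle_saver(model: Any, path: Path) -> None:
    # Generic fallback for any model class without a registered saver.
    with open(path, "wb") as f:
        pickle.dump(model, f)


def save_model(model: Any, directory) -> Path:
    """Serialize a model into `directory` using the best matching saver."""
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    # Walk the class hierarchy so subclasses reuse a parent's saver.
    for cls in type(model).__mro__:
        if cls in _SAVERS:
            saver = _SAVERS[cls]
            break
    else:
        saver = _pickle_saver
    path = directory / "model.bin"
    saver(model, path)
    return path


class LinearModel:
    """Hypothetical stand-in for a real library's model class."""

    def __init__(self, weights):
        self.weights = list(weights)


@register_saver(LinearModel)
def _save_linear(model: LinearModel, path: Path) -> None:
    # A "library-specific" format: plain JSON instead of pickle.
    path.write_text(json.dumps({"weights": model.weights}))
```

With this in place, `save_model(LinearModel([1.0, 2.0]), "out")` writes JSON, while any unregistered object falls through to pickle. The caller never needs to know which format was used.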

“We use <insert company> for everything”

Finally, there are some companies who got regular shout-outs, such as Weights & Biases, Databricks’ MLflow, Azure ML, and Pachyderm. I have not used these directly and am investigating further. So far, it appears that, with each one, I would need to start by understanding its fundamental abstractions.

➡️ With so many end-to-end ML platforms popping up, I’m reminded of enterprise software. Well, I’m not old enough to have lived through that myself 😅 but it sounds similar.

An early peek 👀

Based on what I’ve seen, and what I’ve experienced, I’m finishing up a simple Python library to cater for those early-stage teams who want to start managing their choice of models in their choice of storage. This can be the first step towards getting those models into production!

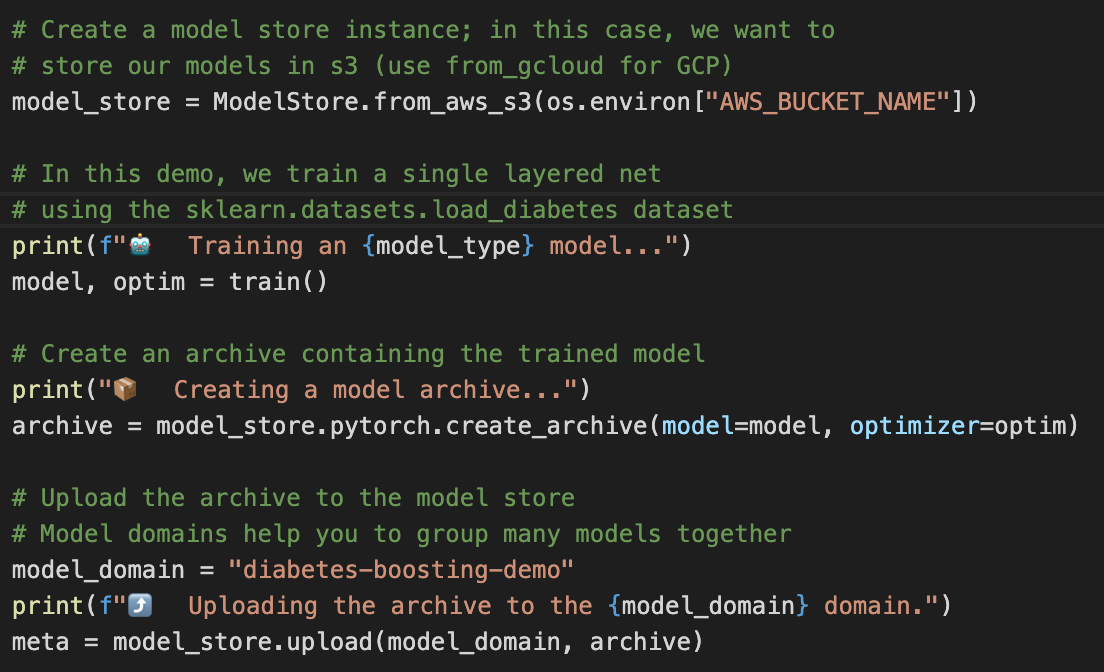

Here’s a sneak peak of what it currently looks like:

Hidden under the hood are:

- A common interface over any kind of storage, starting with: file systems, Google Cloud Storage, and AWS S3. The library takes care of storing model artifacts in a structured way in each of them.

- All that serializing code that everyone is re-writing, starting with: scikit-learn, xgboost, PyTorch, Keras, and catboost (because of Simon) 😸

- Collection of metadata about the Python runtime, dependencies, and git status of the code that was run. Right now, it gets returned to you at the end of the upload.
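To illustrate the shape of the first and last bullets, here is a small sketch of a storage interface with one file-system backend, plus a function that gathers runtime metadata and returns it at the end of an upload. This is not the library's actual API. The names (`Storage`, `FileSystemStorage`, `upload_model`, the `domain` and `model_id` arguments) and the directory layout are assumptions I've made up for the example, and cloud backends are left out so it runs without credentials.

```python
import platform
import subprocess
from abc import ABC, abstractmethod
from importlib import metadata
from pathlib import Path
from typing import Dict, Iterable, Optional


class Storage(ABC):
    """Hypothetical common interface that every storage backend implements."""

    @abstractmethod
    def upload(self, domain: str, model_id: str, artifact: bytes) -> str:
        """Store the artifact and return its location."""


class FileSystemStorage(Storage):
    """Backend that writes to <root>/<domain>/<model_id>/artifact.bin."""

    def __init__(self, root):
        self.root = Path(root)

    def upload(self, domain: str, model_id: str, artifact: bytes) -> str:
        target = self.root / domain / model_id / "artifact.bin"
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(artifact)
        return str(target)


def git_commit() -> Optional[str]:
    """Return the current commit hash, or None outside a git repo."""
    try:
        result = subprocess.run(
            ["git", "rev-parse", "HEAD"],
            capture_output=True, text=True, check=True,
        )
        return result.stdout.strip()
    except (OSError, subprocess.CalledProcessError):
        return None


def dependency_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Look up installed versions; packages that are missing map to None."""
    versions: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def upload_model(storage: Storage, domain: str, model_id: str,
                 artifact: bytes, dependencies: Iterable[str] = ()) -> dict:
    """Upload through any backend and return metadata about the run."""
    location = storage.upload(domain, model_id, artifact)
    return {
        "location": location,
        "python_version": platform.python_version(),
        "dependencies": dependency_versions(dependencies),
        "git_commit": git_commit(),
    }
```

Because `upload_model` only depends on the `Storage` interface, adding a cloud backend would mean writing one more subclass rather than changing any calling code.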

Do you want to take it for a spin?

In the coming weeks, I’m aiming to release the earliest version of this. It’s probably a bit rough around the edges, and we all know that Python on your computer is not the same as Python on my computer. I’ve written a ton of tests, but —

Github needs a "works on my laptop" repo sticker https://t.co/lAFceKgR1q

— Neal Lathia (@neal_lathia) August 26, 2020

If you want to give this a spin, drop me an email 🙏

Want to discuss more?

The biggest takeaway from the work I’ve been doing is that so many of us are working through similar problems when building ML systems. If you want to chat about this, share your pains, or ask me any questions, feel free to reach out!